SoundsGood

Do you understand the sound you make when you perform? How can computers help musicians improve? This project aims to create a mobile application that lets musicians understand their music scientifically, while striking a balance between an analytical representation of sound and what musicians can readily understand.

Deliverables

- Native iOS application

My Role

- Mobile App Developer

- UI/UX Designer

Story

Sound can be difficult to describe, even among musicians and instrument players. Subjective terms such as "Touching" and "Sounds good!" are used all the time.

I don't mean to imply that these expressions have no value. However, in band practice, or when a teacher is guiding students, clear communication is needed to offer suggestions and make adjustments. We therefore cannot rely on vague adjectives alone.

Hmm, it seems music can be complicated. 🥹

Yes! To understand it, we can take a systematic, scientific approach. Physics students learn that pitch is determined by the frequency at which an object vibrates, such as a guitar string or the vocal cords.
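Here is a textbook illustration of the link between vibration and pitch. It describes an idealised string and is not something SoundsGood measures. For a string of length $L$, tension $T$ and linear mass density $\mu$, the fundamental frequency and its harmonics are:

$$
f_1 = \frac{1}{2L}\sqrt{\frac{T}{\mu}}, \qquad f_n = n\,f_1 \quad (n = 1, 2, 3, \dots)
$$

Tightening the string (larger $T$) or shortening it (smaller $L$, as when pressing a fret) raises the pitch. The integer multiples $f_n$ are the harmonics that sound alongside the fundamental.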

You may already be familiar with traditional audio analysis. Most of us have seen at least a waveform, such as the one in the built-in Voice Memos app. A waveform plots amplitude against time, so it reflects how loud the sound is at each moment.
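A waveform display has far more samples than screen pixels (44,100 per second at CD quality). Each short block of audio must therefore be reduced to a single amplitude value before it is drawn. Below is a minimal NumPy sketch that keeps one peak value per block. `waveform_envelope` is a hypothetical helper, and real apps such as Voice Memos may use RMS or other smoothing instead.

```python
import numpy as np


def waveform_envelope(samples: np.ndarray, block_size: int) -> np.ndarray:
    """Peak absolute amplitude of each block of `block_size` samples.

    Plotting these values against time gives a compact waveform overview
    (one bar per block). Any trailing partial block is dropped.
    """
    n_blocks = len(samples) // block_size
    blocks = samples[: n_blocks * block_size].reshape(n_blocks, block_size)
    return np.abs(blocks).max(axis=1)


sr = 44100                  # sample rate in Hz
t = np.arange(sr) / sr      # one second of time stamps
# A 220 Hz tone that fades in linearly over one second
signal = np.linspace(0.0, 1.0, sr) * np.sin(2 * np.pi * 220 * t)

env = waveform_envelope(signal, block_size=4410)  # 10 blocks of 0.1 s each
print(np.round(env, 2))
```

The envelope rises block by block, just as the bars of a fading-in note grow on screen. It shows loudness only: the 220 Hz frequency itself is invisible in this view.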

However, this kind of analysis uncovers only a small part of the information in the audio. To gather more, we can use the well-known Fourier transform to convert the time-domain signal into the frequency domain. This reveals frequency information such as how the energy is distributed across frequencies. It is commonly shown as a spectrum (a single moment) or a spectrogram (how the spectrum changes over time).
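The effect of the transform is easiest to see on a synthetic signal. The minimal NumPy sketch below assumes a hypothetical test tone: a 110 Hz fundamental (A2) plus a quieter 220 Hz overtone. `np.fft.rfft` computes the discrete Fourier transform of a real signal. With one second of audio each bin is 1 Hz wide, so both components land exactly on a bin.

```python
import numpy as np

sr = 44100                       # sample rate in Hz
t = np.arange(sr) / sr           # one second of samples
# A 110 Hz tone plus a quieter 220 Hz overtone: hard to read off a waveform,
# but obvious in the frequency domain
signal = np.sin(2 * np.pi * 110 * t) + 0.5 * np.sin(2 * np.pi * 220 * t)

spectrum = np.abs(np.fft.rfft(signal))          # magnitude of each frequency bin
freqs = np.fft.rfftfreq(len(signal), d=1 / sr)  # frequency of each bin in Hz

# The two largest bins sit at the two component frequencies
top_two = freqs[np.argsort(spectrum)[-2:]]
print(sorted(top_two.tolist()))
```

A spectrogram repeats this over short, overlapping frames, so the spectrum can be followed through time.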

But does it help musicians?

Definitely, but only those in the know, such as the engineers who handle mixing and mastering in music production.

According to my quick user research, most musicians do not have such knowledge, and that is what drove me to create this application.

“How might we let musicians understand their music with their musical knowledge?”

App Development

AudioKit

We originally used Apple’s official AVFoundation framework to capture and manipulate audio data, but found that it requires verbose code even for simple functions.

We therefore looked for a reliable alternative, which is why SoundsGood uses the AudioKit collection of libraries.

We first set up the audio engine from AudioKit.

```swift
init() {
    // Use the device's audio input (microphone); nothing can be analysed without it
    guard let input = engine.input else {
        fatalError()
    }
    self.mic = input

    // Signal chain: mic -> fftMixer -> pitchMixer -> silentMixer -> outputLimiter -> output
    self.fftMixer = Mixer(mic)
    self.pitchMixer = Mixer(fftMixer)
    self.silentMixer = Mixer(pitchMixer)
    self.outputLimiter = PeakLimiter(silentMixer)
    self.engine.output = outputLimiter

    self.setupAudioEngine()
    // ...
}
```

For frequency analysis, we attach an FFTTap and a PitchTap. Their callbacks call self.updateAmplitudes() and self.updatePitch(), which contain our business logic.

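```swift
private func setupAudioEngine() {
    // FFT tap: delivers raw FFT data for the spectrum view
    taps.append(FFTTap(fftMixer) { fftData in
        // Tap callbacks may run off the main thread; hop to main before updating UI state
        DispatchQueue.main.async {
            self.updateAmplitudes(fftData, mode: .scroll)
        }
    })

    // Pitch tap: delivers the detected pitch and its amplitude
    taps.append(PitchTap(pitchMixer) { pitchFrequency, amplitude in
        DispatchQueue.main.async {
            self.updatePitch(pitchFrequency: pitchFrequency, amplitude: amplitude)
        }
    })

    self.toggle()

    // Mute the last mixer so the microphone is analysed but not played back
    silentMixer.volume = 0.0
}
```

Design Pattern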

We use SwiftUI with the MVVM (Model-View-ViewModel) pattern. The property wrappers provided by SwiftUI and Combine let us bind Views to ViewModels with little code.

We keep logic in the ViewModel and UI-related code in the View. We also use parent and child ViewModels.

Iterative Deliverables

Testing is critical in our design cycle. We embrace a “fail fast” approach so that we can continually update our design to meet users’ expectations.

We conducted usability tests with our target users in every iteration, aiming to discover problems and understand how our users behave.

After each iteration, we uncovered points of confusion in the app and improved it accordingly.

Feature Showcase

- Various visualizations of sound, including frequency-domain, time-domain, and harmonic views. ✨

- Describe your sound with a simple and intuitive interface. 📝

- Integrated with a Watch app, and adapted for iPad.

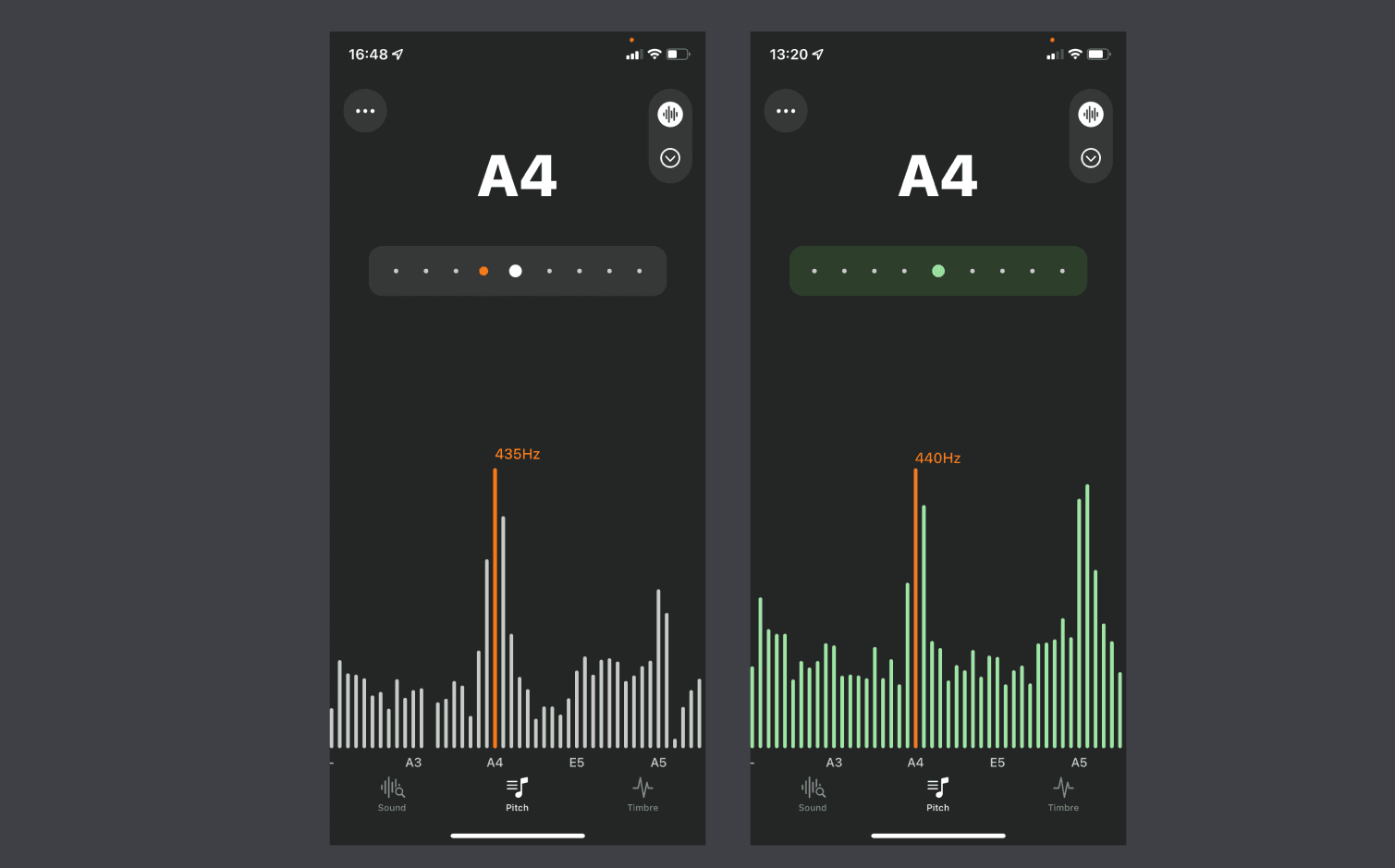

Pitch Detection

Like many other music-related apps, SoundsGood provides pitch detection. Another reason is that musicians are familiar with pitch and care about it a lot.
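Converting a frequency to a note name means counting semitones from C0, then splitting that count into an octave number and a note within the octave. The Python sketch below is a minimal, runnable version of that idea under stated assumptions. It assumes equal temperament tuned to A4 = 440 Hz (which fixes C0 at about 16.35 Hz) and sharps-only note names. `pitch_from_frequency` is an illustrative name, not SoundsGood's actual code.

```python
import math

# Assumption: equal temperament tuned to A4 = 440 Hz. A4 is 57 semitones
# above C0, so C0 = 440 * 2**(-57/12), about 16.35 Hz.
A4 = 440.0
C0 = A4 * 2 ** (-57 / 12)

# Assumption: sharps-only spelling of the 12 pitch classes, starting from C
NOTATION = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def pitch_from_frequency(frequency: float) -> str:
    """Return the nearest equal-tempered note name, e.g. 440.0 -> 'A4'."""
    if frequency <= 0:
        raise ValueError("frequency must be positive")
    # Semitones from C0, rounded to the nearest note
    steps = round(12 * math.log2(frequency / C0))
    octave = steps // 12   # each octave spans 12 semitones
    n = steps % 12         # pitch class within the octave
    return f"{NOTATION[n]}{octave}"


print(pitch_from_frequency(440.0))   # A4
print(pitch_from_frequency(261.63))  # C4 (middle C)
print(pitch_from_frequency(41.2))    # E1 (open low E string of a 4-string bass)
```

Rounding before taking the octave and remainder matters. Without it, a slightly flat A4 (say 438 Hz) would be truncated to G#4.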

```
# Code snippet of converting frequency to pitch in pseudocode
function pitchFromFrequency(frequency):
    steps <- round(12 * log2(frequency / C0))  # Semitones from C0 to frequency, rounded to the nearest note
    octave <- floor(steps / 12)
    n <- steps % 12
    return notation[n] + octave
```

Frequency Visualization

To complement the pitch detection function, we highlight the peak frequency in our frequency visualization.

```
# Code snippet of calculating amplitude from FFT data in pseudocode
function updateAmplitudes:
    # ...striding index i from 0 to 2048 by 2
    real <- fftData[i]
    imaginary <- fftData[i+1]
    normalized <- 2*sqrt(pow(real,2)+pow(imaginary,2))/fftData.size
    dB <- 20*log10(normalized)
    # ...
```

We then apply a “scroll and chase” method to present the fundamental peak frequency, which is the pitch, on the user’s screen.
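The dB conversion in the amplitude pseudocode can be checked with a small NumPy sketch. Following the pseudocode's striding, it assumes the FFT output is interleaved as [re0, im0, re1, im1, ...]. The function name `amplitudes_db`, the 1e-12 floor and the test tone are illustrative choices. The peak is found with a plain argmax over the bins. The “scroll and chase” method is not described in detail, so it is not reproduced here.

```python
import numpy as np


def amplitudes_db(fft_data: np.ndarray) -> np.ndarray:
    """Convert interleaved [re0, im0, re1, im1, ...] FFT output to dB per bin.

    Uses the pseudocode's normalisation 2 * |X| / fft_data.size, so a sine of
    amplitude A that falls exactly on a bin reads as 20 * log10(A) dB.
    """
    real = fft_data[0::2]
    imaginary = fft_data[1::2]
    normalized = 2 * np.sqrt(real**2 + imaginary**2) / fft_data.size
    # Floor tiny values so silent bins give a large negative dB instead of -inf
    return 20 * np.log10(np.maximum(normalized, 1e-12))


sr = 44100                    # sample rate in Hz
n = 4096                      # samples per analysis frame
t = np.arange(n) / sr
tone_hz = 100 * sr / n        # hypothetical tone centred on bin 100 (no leakage)
frame = 0.5 * np.sin(2 * np.pi * tone_hz * t)

spectrum = np.fft.rfft(frame)[: n // 2]   # keep n/2 bins (drop Nyquist)
interleaved = np.empty(n)
interleaved[0::2] = spectrum.real
interleaved[1::2] = spectrum.imag

db = amplitudes_db(interleaved)
peak_bin = int(np.argmax(db))
peak_hz = peak_bin * sr / n               # bin index -> frequency in Hz
print(peak_bin, round(peak_hz, 1), round(db[peak_bin], 2))
```

Argmax returns the strongest bin. For instruments whose overtones are louder than the fundamental, that bin is not always the pitch, which is why a dedicated pitch detector is useful alongside the spectrum.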

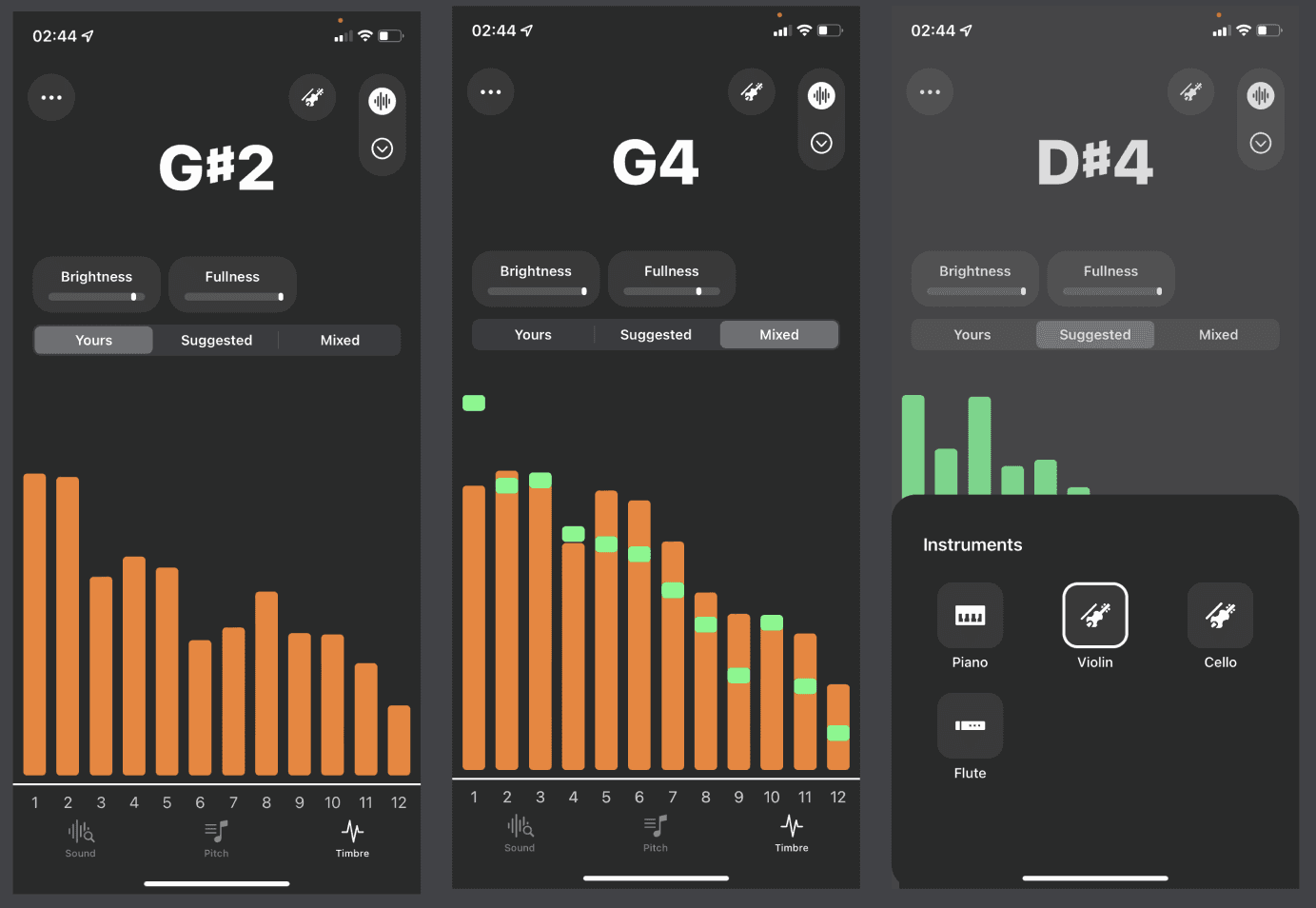

Timbre Suggestion

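To give our users a reference for their sound, we first need recordings by professional players to serve as a standard. From each reference recording we compute an average spectrum over the sustained part of the note.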

data, sr = librosa.load(audio_file, sr=44100)

FRAME_SIZE = 2048

HOP_SIZE = 512

stft_data = librosa.stft(data, n_fft=FRAME_SIZE, hop_length=HOP_SIZE)

# Extract columns (Range of frames)

sustain_data = np.abs(stft_data)[:, int(

stft_data.shape[1]/4): int(stft_data.shape[1]/2)]

# Take average of each rows

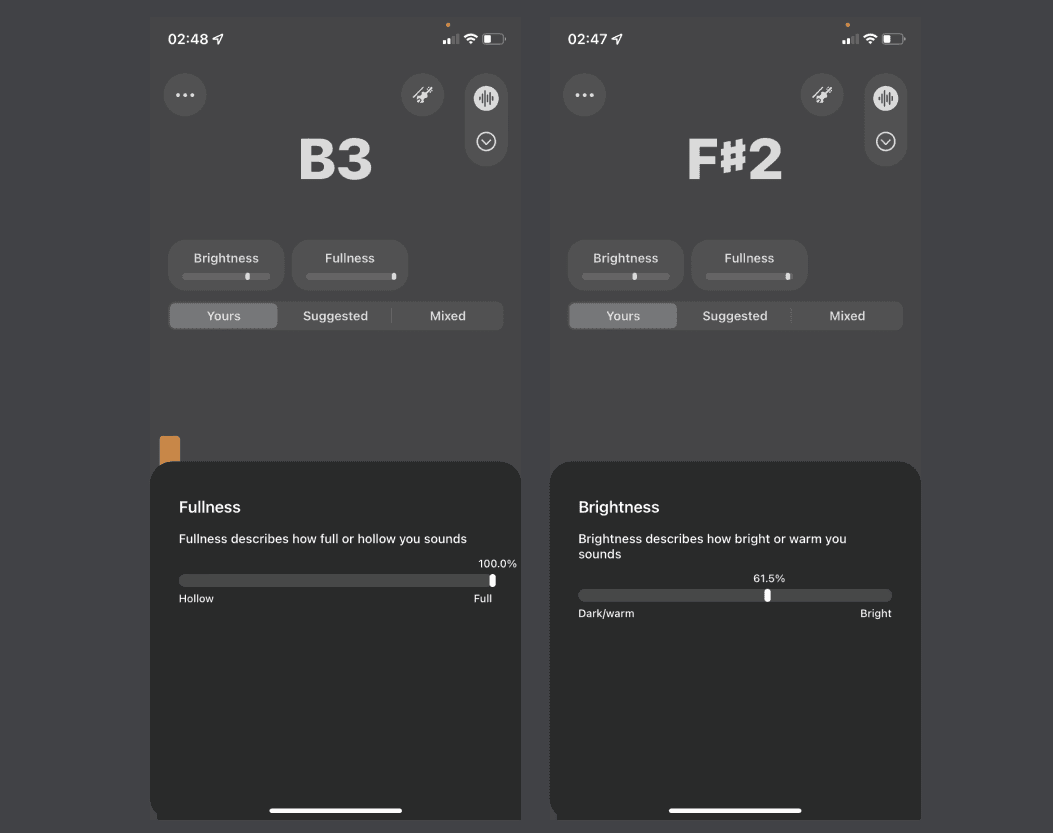

average_data = np.mean(sustain_data, axis=1)Timbre Attribute

User feedback showed that musicians wanted more help interpreting the data. Our information had to state more explicitly what it was trying to express.

Musicians describe their sound with certain timbre characteristics, and we can convert our frequency data into these properties with some mathematical calculations.
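Brightness is one timbre attribute that can be computed directly from frequency data. One common measure, not necessarily the calculation SoundsGood uses, is the spectral centroid: the magnitude-weighted mean frequency. A higher centroid means more energy in the upper harmonics, which listeners tend to hear as a brighter sound. The function name and test tones below are hypothetical.

```python
import numpy as np


def spectral_centroid(magnitudes: np.ndarray, freqs: np.ndarray) -> float:
    """Magnitude-weighted mean frequency in Hz (a common 'brightness' measure)."""
    total = magnitudes.sum()
    if total == 0:
        return 0.0  # silence has no meaningful centroid
    return float((freqs * magnitudes).sum() / total)


sr = 44100
n = 4096
t = np.arange(n) / sr
freqs = np.fft.rfftfreq(n, d=1 / sr)   # frequency of each rfft bin in Hz

f0 = 20 * sr / n  # fundamental placed exactly on bin 20 (about 215 Hz)
# "Dark" tone: fundamental only. "Bright" tone: adds strong odd harmonics.
dark = np.sin(2 * np.pi * f0 * t)
bright = dark + 0.8 * np.sin(2 * np.pi * 3 * f0 * t) + 0.6 * np.sin(2 * np.pi * 5 * f0 * t)

c_dark = spectral_centroid(np.abs(np.fft.rfft(dark)), freqs)
c_bright = spectral_centroid(np.abs(np.fft.rfft(bright)), freqs)
print(round(c_dark), round(c_bright))
```

Both tones have the same pitch, but the bright one has a centroid of about 2.7 times the fundamental. That is the kind of difference a timbre attribute is meant to surface.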

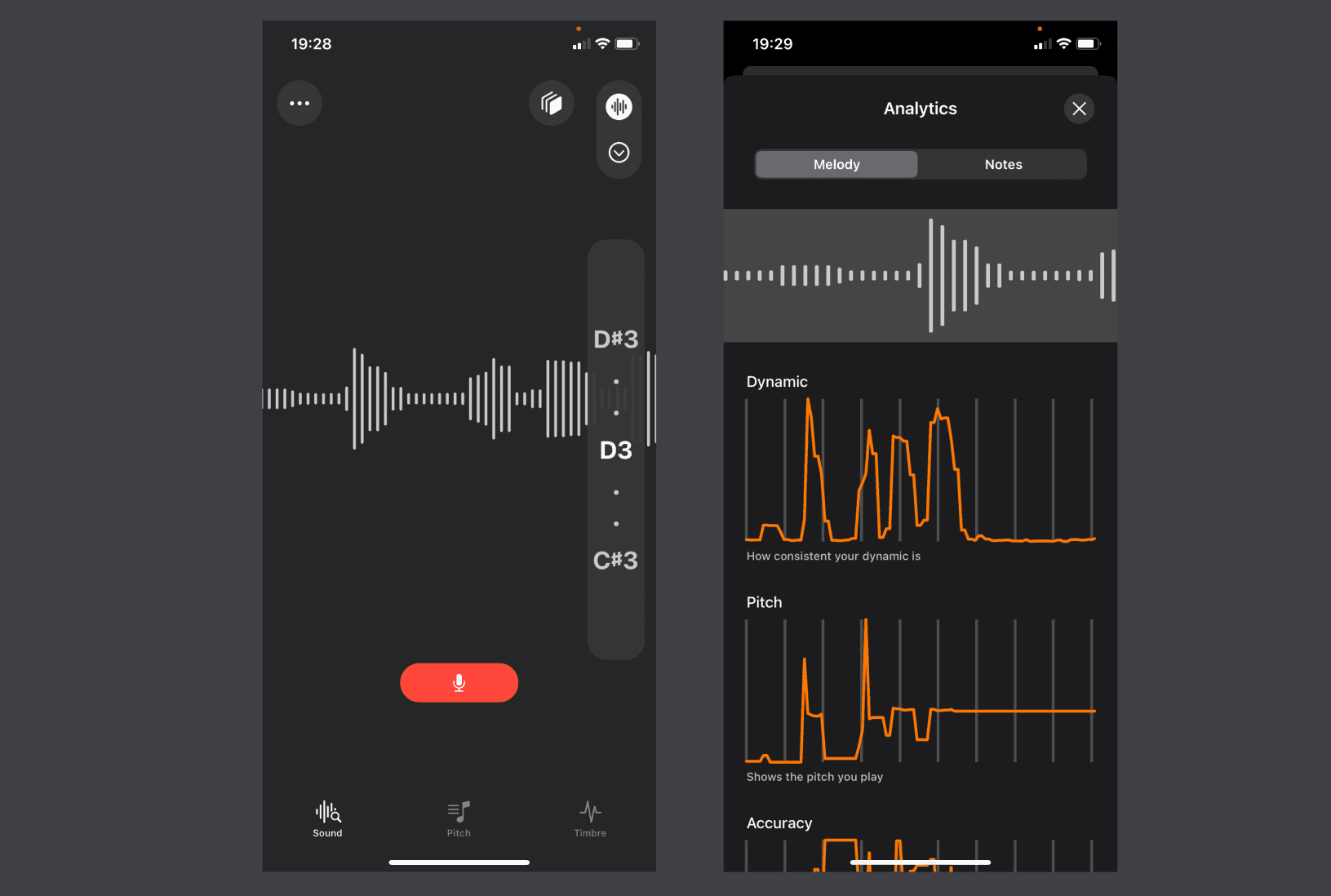

Recording Analysis

We want users to be able to analyze a specific passage, such as a bass line or melody, in depth, so we provide dynamics, pitch, and pitch-detune charts.
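A pitch-detune chart plots how far each detected pitch is from the nearest note. The minimal sketch below measures that deviation in cents (hundredths of a semitone), assuming equal temperament at A4 = 440 Hz. `detune_cents` is a hypothetical helper, not part of the SoundsGood code base.

```python
import math

A4 = 440.0  # assumed tuning reference in Hz


def detune_cents(frequency: float) -> float:
    """Signed deviation in cents from the nearest equal-tempered note.

    100 cents = 1 semitone, so the result lies in [-50, 50].
    Positive means sharp, negative means flat.
    """
    semitones_from_a4 = 12 * math.log2(frequency / A4)
    nearest = round(semitones_from_a4)
    return 100 * (semitones_from_a4 - nearest)


print(round(detune_cents(440.0), 1))  # 0.0
print(round(detune_cents(445.0), 1))  # 19.6 (sharp)
```

Because cents are logarithmic, the same value means the same audible mistuning in any register. A raw difference in Hz would not behave this way.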

Additionally, our note-splitting algorithm lets users focus on the characteristics of each note, for example the shape of its ADSR (attack, decay, sustain, release) envelope or how its pitch changes over time.
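The core idea is to cut the recording at silent points and drop segments too short to be notes. It can be sketched as a single pass in Python. This is a simplified re-implementation under assumptions, not the app's function. Each sample is treated as a bare amplitude value rather than a `RecordingData` record. The hypothetical `min_note_length` parameter plays the role of `ignoreTimeThreshold`, although the Swift excerpt's elided branches may handle short segments differently.

```python
from typing import List, Sequence


def split_by_silence(amplitudes: Sequence[float], amp_threshold: float,
                     min_note_length: int) -> List[List[float]]:
    """Split an amplitude sequence into notes separated by silent samples.

    A sample is 'silent' when abs(amplitude) < amp_threshold. Runs of
    non-silent samples shorter than min_note_length are discarded as noise.
    """
    notes: List[List[float]] = []
    current: List[float] = []
    for amp in amplitudes:
        if abs(amp) < amp_threshold:
            # Silence ends the current run; keep it only if it is long enough
            if len(current) >= min_note_length:
                notes.append(current)
            current = []
        else:
            current.append(amp)
    # The recording may stop before the last note ends
    if len(current) >= min_note_length:
        notes.append(current)
    return notes


data = [0.0, 0.5, 0.6, 0.4, 0.0, 0.0, 0.9, 0.0, 0.7, 0.8, 0.6]
print(split_by_silence(data, amp_threshold=0.1, min_note_length=2))
# [[0.5, 0.6, 0.4], [0.7, 0.8, 0.6]]
```

The lone 0.9 sample is dropped because it is shorter than `min_note_length`, which filters out clicks and string noise between notes.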

```swift
func splitAudioBySilenceWithAmplitude(data: Array<RecordingData>, ampThreshold: Double) -> [[RecordingData]] {
    // ...

    // SPLITTING ALGORITHM (considers silent points by amplitude threshold only)
    // i is the position of the first silent point in the remaining recording array.
    // The head of remainingData should be the start of a note whenever we append it.
    // We look for a silent point first, then for another non-continuous silent point (a note lies in between).
    // Each note is stored as an array, giving an array of arrays for the whole melody.

    // While there is a silent point in the remaining data
    while let i = remainingData.firstIndex(where: { abs($0.amplitude) < ampThreshold }) {
        // A non-silent head longer than ignoreTimeThreshold is kept as a note
        if i > ignoreTimeThreshold {
            // Append the head up to i (the first silent point in the remaining data)
            splittedAudio.append(Array(remainingData[..<i])) // That's the note we want!
        } else {
            // ...
        }
        // Keep only the remaining amplitude data
        remainingData = Array(remainingData[(i + 1)...])
    }

    // Handle the case where the recording stops before the last note ends
    // ...

    return splittedAudio
}
```

Here is a video of me playing the bass with the app.

More…

To learn more about musical knowledge and audio processing, take a look at my Medium article, which is written in Cantonese:

https://medium.com/computer-music-research-notes/你了解你演奏的聲音嗎-電腦如何輔助音樂人進步-7a88f9f5fb79

![SoundsGood screenshot 1](https://res.cloudinary.com/laporatory/image/upload/v1660796555/SoundsGood/work-soundsgood-1_sopk48.png)

![SoundsGood screenshot 2](https://res.cloudinary.com/laporatory/image/upload/v1660796574/SoundsGood/work-soundsgood-2_vxolkq.png)

![SoundsGood screenshot 3](https://res.cloudinary.com/laporatory/image/upload/v1660796595/SoundsGood/work-soundsgood-3_fs4muh.jpg)